Principal components from PCA were computed on Clip-ViT-B-32 embeddings... | Download Scientific Diagram

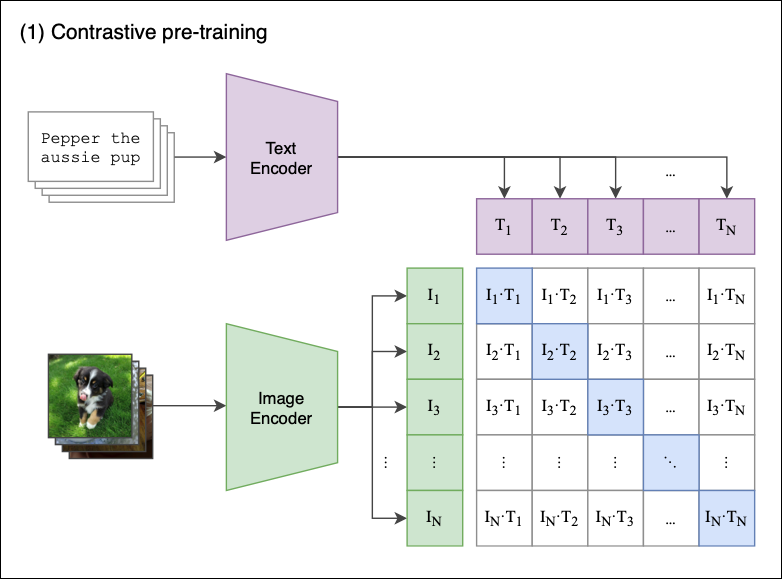

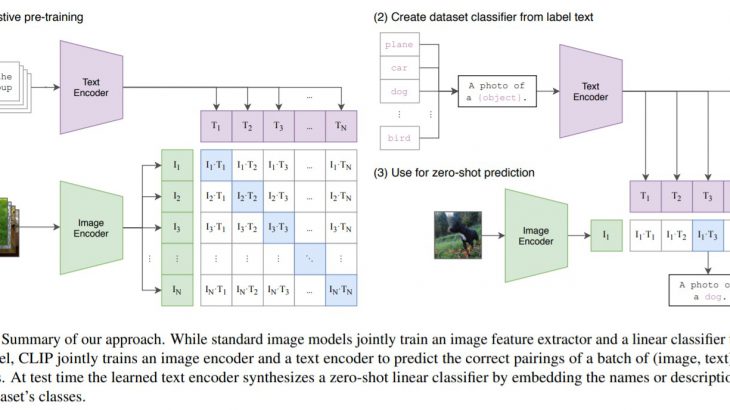

Review — CLIP: Learning Transferable Visual Models From Natural Language Supervision | by Sik-Ho Tsang | Medium

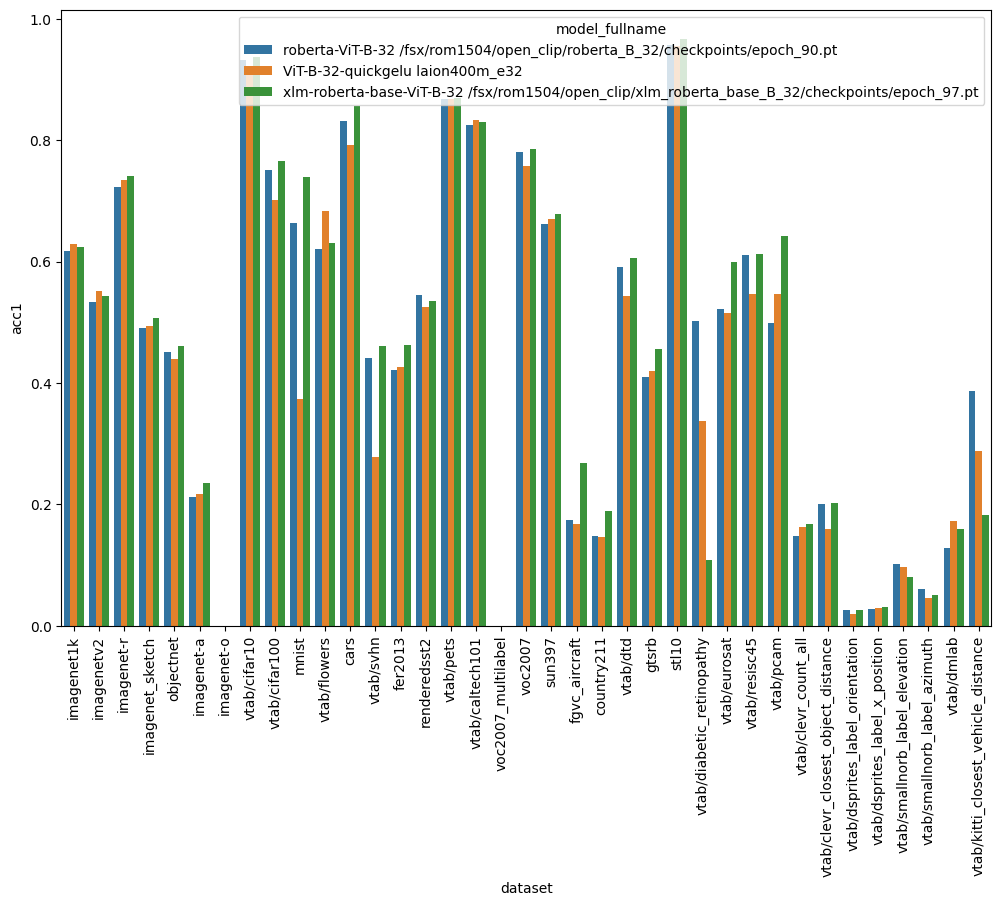

Romain Beaumont on Twitter: "@AccountForAI and I trained a better multilingual encoder aligned with openai clip vit-l/14 image encoder. https://t.co/xTgpUUWG9Z 1/6 https://t.co/ag1SfCeJJj" / Twitter

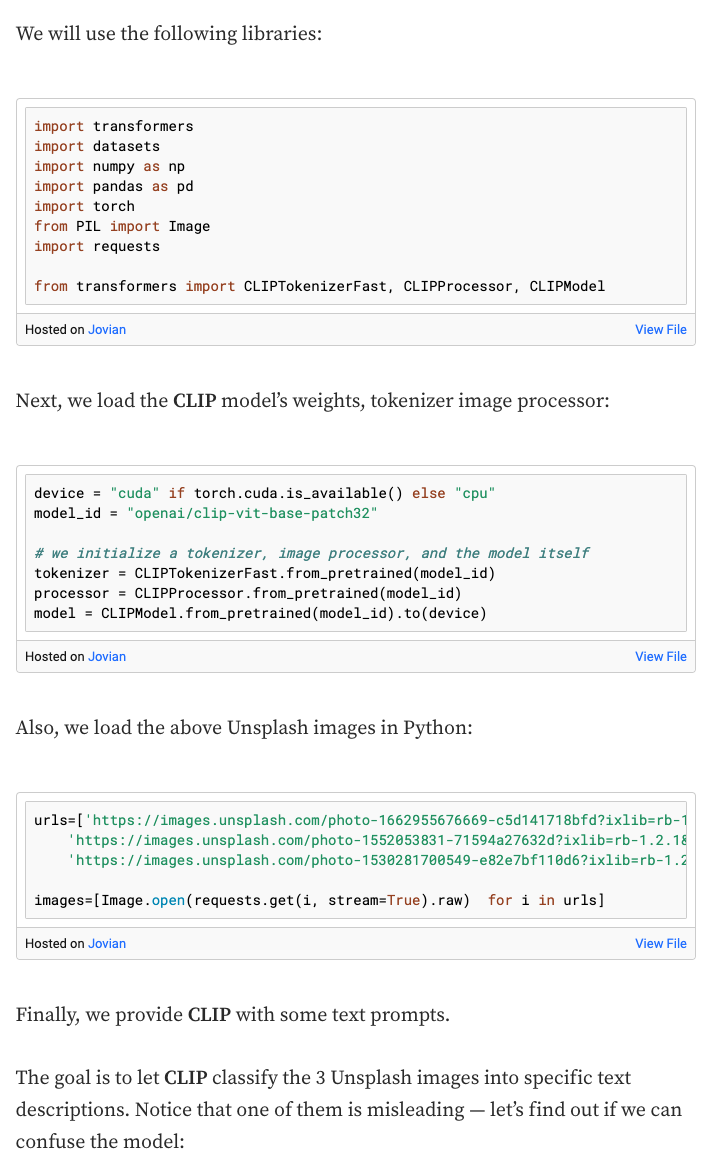

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

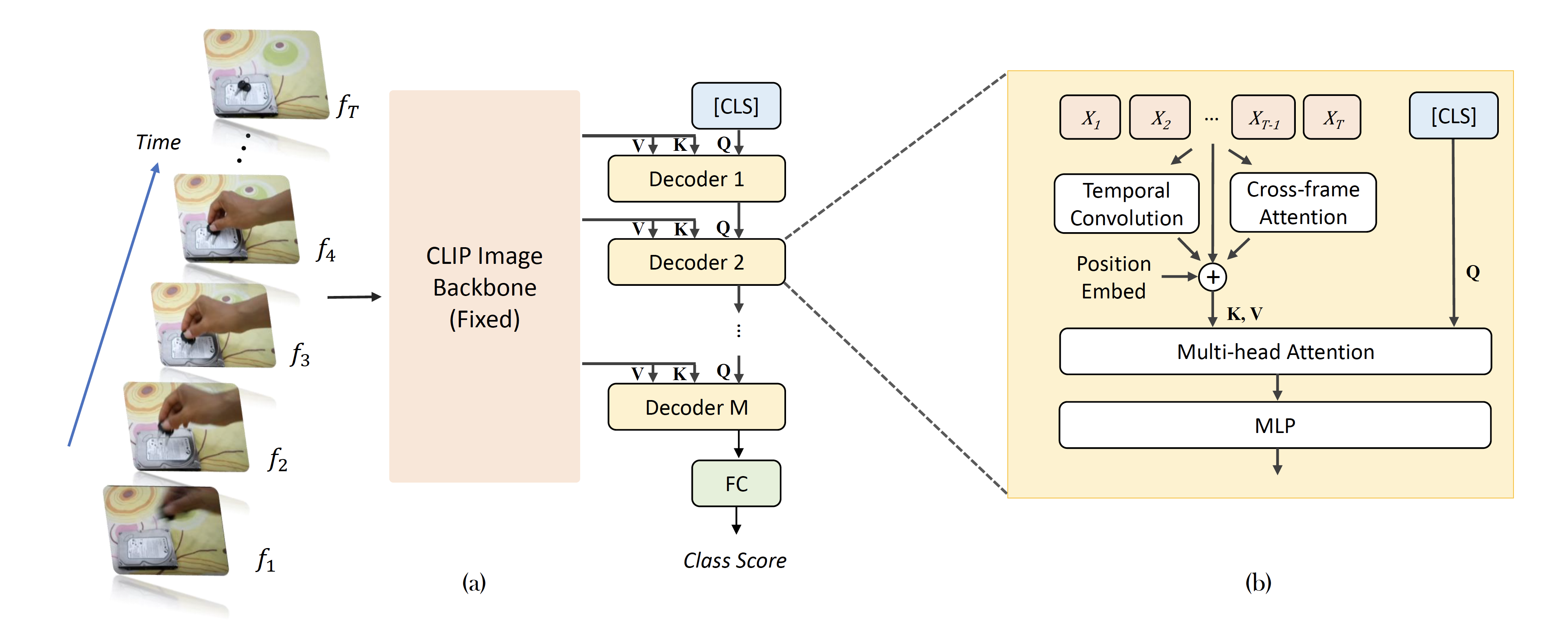

CLIP Itself is a Strong Fine-tuner: Achieving 85.7% and 88.0% Top-1 Accuracy with ViT-B and ViT-L on ImageNet – arXiv Vanity

apolinário (multimodal.art) on Twitter: "Yesterday OpenCLIP released the first LAION-2B trained perceptor! a ViT-B/32 CLIP that suprasses OpenAI's ViT-B/32 quite significantly: https://t.co/X4vgW4mVCY https://t.co/RLMl4xvTlj" / Twitter

![[WebUI] Swapping the CLIP weights of a Stable Diffusion-based model for better ones](https://storage.googleapis.com/zenn-user-upload/d6c76c2f86ea-20221206.png)